Breaking New Ground: Meta’s Llama 3.3 70B Debuts in Amazon SageMaker JumpStart

Meta’s Llama 3.3 70B is now available in Amazon SageMaker JumpStart. This latest release in the Llama family is a notable step for large language models (LLMs): it delivers performance comparable to much larger Llama models while requiring far fewer computational resources.

A Closer Look at Llama 3.3 70B

Llama 3.3 70B is more than an incremental update; it is a substantial gain in efficiency and output quality. It delivers results nearly on par with Llama 3.1 405B while requiring only a fraction of the compute. According to Meta, this efficiency gain makes inference nearly five times more cost-effective, which makes the model an attractive choice for enterprises planning production deployments.

Llama 3.3 70B is built on an optimized transformer architecture with an enhanced attention mechanism designed to substantially reduce inference costs. Meta trained the model on roughly 15 trillion tokens, combining web-sourced data with more than 25 million synthetic examples, which gives it strong comprehension and generation capabilities across a wide range of tasks.

A key differentiator for Llama 3.3 70B is its training regimen. The model underwent extensive supervised fine-tuning, complemented by Reinforcement Learning from Human Feedback (RLHF), to align its outputs with human preferences without sacrificing performance. In benchmark comparisons against its larger sibling, Llama 3.1 405B, Llama 3.3 70B trailed by less than 2% on six of ten standard benchmarks and outperformed it on three.

Embarking on Your Journey with SageMaker JumpStart

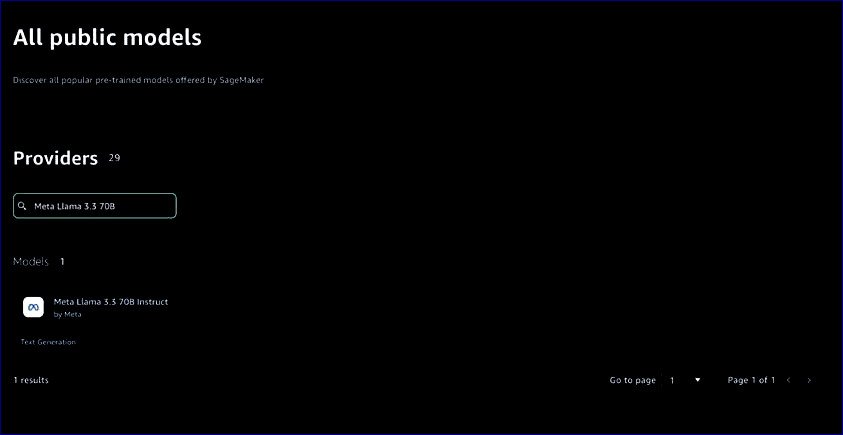

Ready to get started? SageMaker JumpStart is a machine learning (ML) hub designed to accelerate your ML projects. With it, you can evaluate, compare, and select pre-trained foundation models, including the Llama 3 models, and customize them for your own applications.

You can deploy Llama 3.3 70B in two ways: through the SageMaker JumpStart UI or programmatically with the SageMaker Python SDK. Let’s look at both options.

Option 1: Deploying Llama 3.3 70B via the SageMaker JumpStart UI

- Access SageMaker JumpStart through Amazon SageMaker Unified Studio or SageMaker Studio.

- Navigate to JumpStart models in the Build menu.

- Locate the Meta Llama 3.3 70B model.

- Hit the Deploy button.

- Agree to the end-user license agreement (EULA).

- Select your instance type (either ml.g5.48xlarge or ml.p4d.24xlarge).

- Finalize by clicking Deploy.

Wait until the endpoint status shows “InService,” and the model is ready to serve inference requests.
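The wait-then-invoke step can also be scripted. The sketch below is a minimal example, assuming the two clients passed in behave like `boto3.client("sagemaker")` and `boto3.client("sagemaker-runtime")`. The helper names `wait_until_in_service` and `generate_text`, the polling interval, the timeout, and the endpoint name in the usage comments are hypothetical and not part of the original walkthrough. The payload follows the same `inputs`/`parameters` shape as the SDK example in this post.

```python
import json
import time


def wait_until_in_service(sm_client, endpoint_name, poll_seconds=30, timeout_seconds=3600):
    """Poll DescribeEndpoint until the endpoint reports InService."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = sm_client.describe_endpoint(EndpointName=endpoint_name)["EndpointStatus"]
        if status == "InService":
            return status
        if status == "Failed":
            raise RuntimeError(f"Endpoint {endpoint_name} failed to deploy")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Endpoint {endpoint_name} still {status} after {timeout_seconds}s")
        time.sleep(poll_seconds)


def generate_text(runtime_client, endpoint_name, prompt, max_new_tokens=128):
    """Send one JSON request to the endpoint and return the parsed JSON response."""
    payload = {
        "inputs": prompt,
        "parameters": {"max_new_tokens": max_new_tokens, "top_p": 0.9, "temperature": 0.6},
    }
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps(payload),
    )
    # The response body is a stream; read it fully before decoding.
    return json.loads(response["Body"].read())


# Usage (requires AWS credentials and a deployed endpoint; the name is a placeholder):
# import boto3
# wait_until_in_service(boto3.client("sagemaker"), "my-llama-endpoint")
# print(generate_text(boto3.client("sagemaker-runtime"), "my-llama-endpoint", "Hello"))
```

Passing the clients in as arguments keeps the helpers easy to exercise with stand-in objects before pointing them at a real endpoint.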

Option 2: Automating Deployment with the SageMaker Python SDK

For organizations that want to automate deployment as part of their MLOps pipelines, the following snippet shows how to deploy the model programmatically:

```python
from sagemaker.serve.builder.model_builder import ModelBuilder
from sagemaker.serve.builder.schema_builder import SchemaBuilder
from sagemaker.jumpstart.model import ModelAccessConfig
from sagemaker.session import Session
import logging

# Session, default S3 bucket, and execution role for the deployment
sagemaker_session = Session()
artifacts_bucket_name = sagemaker_session.default_bucket()
execution_role_arn = sagemaker_session.get_caller_identity_arn()

# Model details
js_model_id = "meta-textgeneration-llama-3-3-70b-instruct"
gpu_instance_type = "ml.p4d.24xlarge"

# Sample inputs and response
response = "Hello, I'm a language model, and I'm here to help you with your English."
sample_input = {
    "inputs": "Hello, I'm a language model,",
    "parameters": {"max_new_tokens": 128, "top_p": 0.9, "temperature": 0.6},
}
sample_output = [{"generated_text": response}]

# Build and deploy the model
schema_builder = SchemaBuilder(sample_input, sample_output)
model_builder = ModelBuilder(
    model=js_model_id,
    schema_builder=schema_builder,
    sagemaker_session=sagemaker_session,
    role_arn=execution_role_arn,
    log_level=logging.ERROR,
)
model = model_builder.build()
predictor = model.deploy(
    model_access_configs={js_model_id: ModelAccessConfig(accept_eula=True)},
    accept_eula=True,
)

# Run a test inference against the new endpoint
predictor.predict(sample_input)
```

Unlocking Cost Savings: Scaling Down to Zero

Optionally, you can configure auto scaling to scale the endpoint down to zero after deployment, which avoids paying for idle compute.
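As a rough illustration of what such a configuration might involve, the sketch below builds the arguments for registering a scalable target with Application Auto Scaling. It assumes the model is served as a SageMaker inference component and that boto3 is used for the call. The helper name `scale_to_zero_target`, the component name, and the maximum copy count are hypothetical, so check the values against the current Application Auto Scaling documentation before relying on them.

```python
def scale_to_zero_target(inference_component_name, max_copies=2):
    """Build keyword arguments for register_scalable_target.

    MinCapacity=0 is what allows the copy count to drop to zero when idle.
    """
    if max_copies < 1:
        raise ValueError("max_copies must be at least 1")
    return {
        "ServiceNamespace": "sagemaker",
        "ResourceId": f"inference-component/{inference_component_name}",
        "ScalableDimension": "sagemaker:inference-component:DesiredCopyCount",
        "MinCapacity": 0,
        "MaxCapacity": max_copies,
    }


# Usage (requires AWS credentials and an existing inference component;
# the component name is a placeholder):
# import boto3
# aas = boto3.client("application-autoscaling")
# aas.register_scalable_target(**scale_to_zero_target("my-llama-component"))
```

Registering the target only sets the lower and upper bounds. A scaling policy is still needed to decide when copies are removed and when they are added back.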

Elevating Deployment with SageMaker AI

SageMaker AI simplifies deploying large models such as Llama 3.3 70B, with features that balance performance and cost. With these capabilities, enterprises can deploy and manage LLMs efficiently in production.

One standout feature, Fast Model Loader, speeds up model initialization with a weight streaming mechanism. Instead of first loading the entire model into memory, it streams weights directly from Amazon S3 to the accelerator, which shortens startup times.
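To make the contrast concrete, here is a toy sketch of load-everything versus streaming. It uses in-memory bytes in place of Amazon S3 and a Python list in place of accelerator memory. It is a conceptual illustration only and does not reflect how Fast Model Loader is implemented; the function names and chunk size are hypothetical.

```python
import io


def load_then_copy(source, device):
    """Baseline: read the whole object into host memory, then hand it over."""
    blob = source.read()          # entire payload buffered on the host
    device.append(blob)
    return len(blob)              # peak host buffer size in bytes


def stream_to_device(source, device, chunk_size=4):
    """Streaming: forward fixed-size chunks as they arrive."""
    peak = 0
    while True:
        chunk = source.read(chunk_size)
        if not chunk:
            break
        device.append(chunk)      # each chunk is handed off immediately
        peak = max(peak, len(chunk))
    return peak                   # peak host buffer size in bytes


weights = bytes(range(10))        # stand-in for model weights

dev_a, dev_b = [], []
peak_full = load_then_copy(io.BytesIO(weights), dev_a)
peak_stream = stream_to_device(io.BytesIO(weights), dev_b)

# Both paths deliver identical bytes; only the host-side buffering differs.
assert b"".join(dev_a) == b"".join(dev_b) == weights
print(peak_full, peak_stream)     # prints "10 4"
```

In the streaming path the host never holds more than one chunk at a time, which is the property the weight streaming description above points to.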

Container Caching removes a common deployment bottleneck by pre-caching container images, which significantly reduces latency during scaling. This matters most for large models like Llama 3.3 70B.

Finally, Scale to Zero automatically adjusts compute capacity based on real-time usage. This lowers costs while still allowing capacity to scale back up quickly, which helps organizations running multiple models or handling fluctuating workloads.

Conclusion

Combining Llama 3.3 70B with the deployment capabilities of SageMaker AI gives organizations a practical path to production. They can pair the model’s efficiency with these deployment features to achieve both strong performance and lower costs.

We encourage you to try Llama 3.3 70B and share your experience!

Meet the Innovators Behind the Scenes

- Marc Karp: An ML Architect with the Amazon SageMaker Service team, dedicated to sculpting robust ML workloads.

- Saurabh Trikande: A Senior Product Manager passionate about democratizing AI and enhancing the accessibility of complex applications.

- Melanie Li, PhD: A Senior Generative AI Specialist Solutions Architect with a wealth of experience in pioneering AI initiatives.

- Adriana Simmons: A Senior Product Marketing Manager at AWS.

- Lokeshwaran Ravi: A Senior Deep Learning Compiler Engineer focused on optimizing and securing AI technologies.

- Yotam Moss: Software Development Manager for Inference at AWS AI.

Dive into the world of advanced machine learning with the pioneering work of these exceptional individuals!